Support Vector Machine for Triple Junction dataset

4.1. Support Vector Machine for Triple Junction dataset#

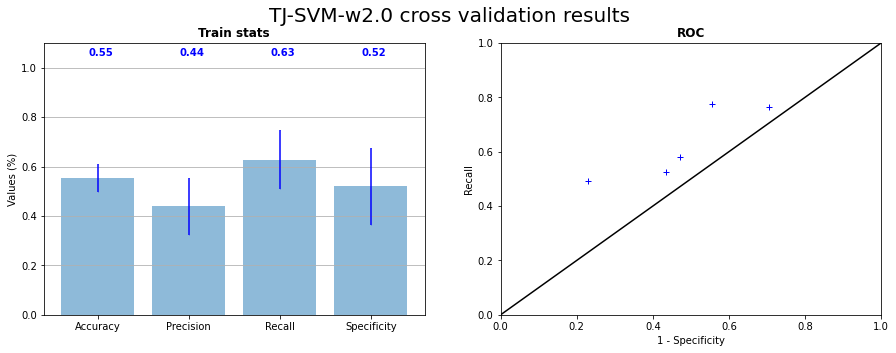

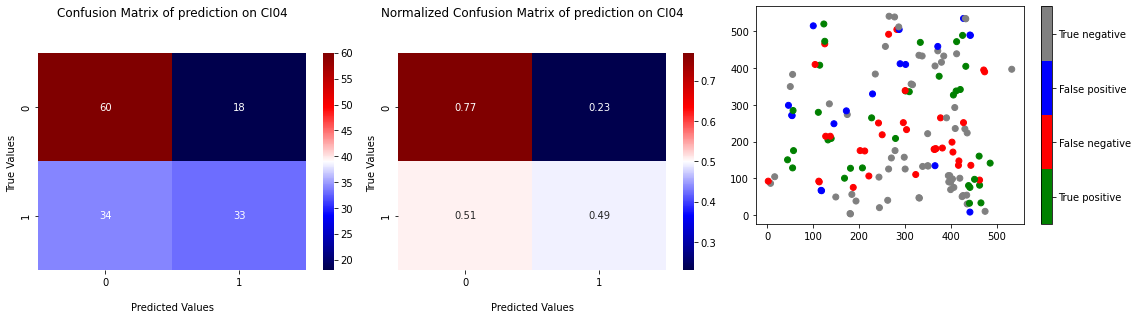

SVM being the best model of the previous strategy, we started to apply it to the TJ dataset. Coming from a dataset with many observations, considering only TJ reduce drastically the number of observations of the samples (see Table 2.3). This low number of observations amplify the difference of distribution between the 5 samples which leads to an increase of the variance of the cross validation results. We can see on Figure 4.1 this increase of the variance on recall and specificity. The points of tests on ROC curve are also really spaced and close to the \(x=y\) straight, that signify that the model is not really better than a random classifier. The Figure 4.2 shows the confusion matrix and the projection for the test on CI04.

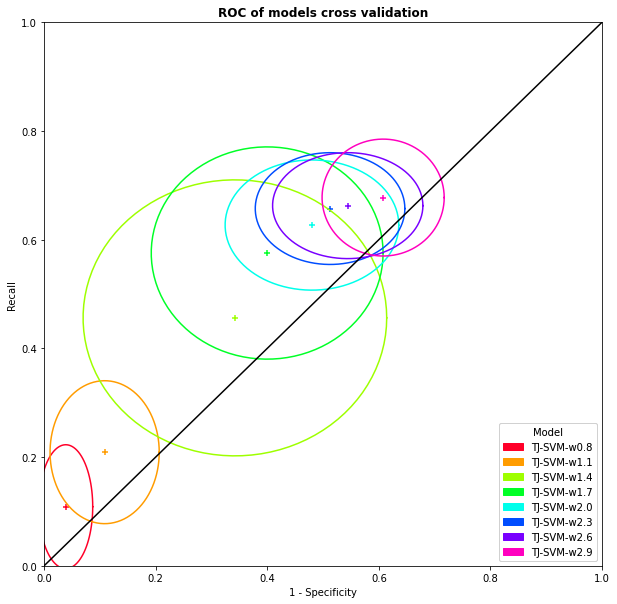

In view to involve a little results, we tried to modify classification class weights. The Figure 4.3 represents the results of the sevral cross validations done with different weight for the RX class. We can observe that better the huge variance of the results and that means never get out the \(x=y\) straight.

A little bit out of time, we choose to move on ANN models and not going further, this TJ tabular dataset that seems too poor in information to allow us to distinguish any trend.

Fig. 4.1 SVM RBF kernel cross validation on the 5 samples of TJ dataset statistics and corresponding ROC curve#

Fig. 4.2 Confusion matrix of prediction of SVM on CI04 TJ dataset with learning on the 4 others samples and projection of the prediction.#

Fig. 4.3 SVM on TJ dataset cross validation mean and standard deviation with variation of RX class weight#